I think my recommendation of ML for Dummies was an excellent idea. Followed by DL for Dummies!

He's misunderstanding the role of randomness and conflating concepts from the training process with the final model's output process, thinking that you could create a large distribution of outputs and somehow aggregate/filter them for "correctness", thus getting a better output at additional computational cost. But that's not how this works.

AI: The Rise of the Machines... Or Just a Lot of Overhyped Chatbots?

- Thread starter Sanrith Descartes



- 16,305

- -2,228

We must be using different definitions of the word "rare" because the thing can pass the MBA.

"The incorrect output, while it happens, is rare"

The incorrect output is always there and it's never rare. Without millions of incorrect outputs, the model would not have any sort of accuracy. Unless you mean incorrect output from ChatGPT itself? Those aren't part of the training data set.

I was not at any point talking about changing the training process. I was completely talking about a way to curate the final output with the current model. I have no idea what I said that made you think I was talking about changing the training model, but at no point was that what I was talking about.

Classical was the wrong term perhaps, but it is definitely the model that the majority of AI learning algorithms are following these days.

"the classic model for machine learning being one algorithm that is good at error checking things, the other that keeps trying to adjust its own model until it can fool the error checker"

This is not the classical model of machine learning. This is a model of an encoder-decoder architecture for deep learning models.

You are far more ignorant than you let on if this is confusing to you. Behind the scenes it's math all the way down. The reason there's a different output each time you put in the same input is because behind the scenes it's using a different random seed. Are you really this ignorant while pretending to be this smart? Because holy shit, Wormie. I always knew you were arrogant and stupid, but this is new levels for you.

"seeds"

Wut?

"aggregate average"

Wut?

I'm not even gonna bother reading the rest of that comment. You're a moron.


- 16,305

- -2,228

Actually, nah, I can't help myself. I'll keep going.

I never said shit was getting removed from the training data by the algorithm. I was talking about the final output that the user sees. You're grasping at straws to make me look dumb, but you don't even understand what the fuck I'm saying. Largely because you are far more ignorant than you realize.

"anomalous output should be removed"

Outputs are not removed during training. Removing output from ChatGPT responses is meaningless, as it is outside of training.

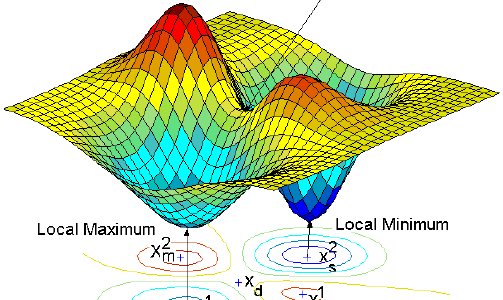

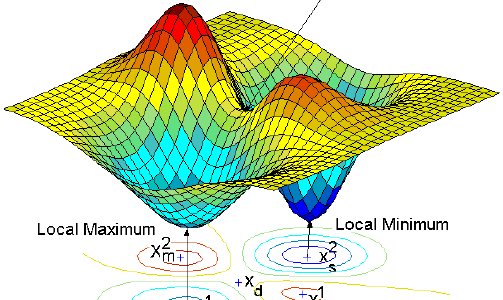

Captain Suave understood it, I'm sure. It is obvious. On the spectrum from deterministic to probabilistic, noise diffusion is way more probabilistic and large language models are way more deterministic. One of the primary reasons is that you WANT image generation to offer a plethora of results that are then curated, but it also goes back to the way a computer encodes images versus the way it encodes text. Think of the difference in size between a txt file and a high-res jpg if that works better for your peanut brain.

"And obviously the concept works better for large language models than for image generation AI."

Why is it obvious? You are talking about adjusting training inputs, which are numerical vectors in image recognition just as they are in language recognition. Yeah, in one they are binary and in the other they are grayscale or RGB values, but still, what is being adjusted and what exactly is obvious about anything you are saying here?

If computing power wasn't limited, then users wouldn't be waiting in queues to log on. Are you seriously this fucking stupid? Yeah, sure, you can scale it up infinitely if you have infinite dollars. No shit, Sherlock. We are talking about the real world tho.

"My proposal would involve generating something like 10-50 outputs for each input"

What output are you talking about? What input? Computing power isn't limited, just add more GPUs. Time might become a constraint, but if you are training a model for a month, you can afford to train for 2 months.
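On the deterministic-versus-probabilistic point: in a typical language model, the randomness at output time comes from how the next token is chosen. A minimal sketch in Python, assuming a made-up three-word vocabulary with made-up scores (`TOKENS`, `LOGITS`, and all function names here are hypothetical, not any real model's API): greedy decoding always picks the top-scoring token, while temperature sampling draws from a softmax distribution and so can vary between runs unless the generator is seeded.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(tokens, logits):
    """Deterministic decoding: always return the highest-scoring token."""
    best = max(range(len(tokens)), key=lambda i: logits[i])
    return tokens[best]


def sample(tokens, logits, temperature, rng):
    """Stochastic decoding: draw one token according to softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-token scores for a tiny vocabulary (illustrative numbers).
TOKENS = ["cat", "dog", "bird"]
LOGITS = [2.0, 1.5, 0.1]

if __name__ == "__main__":
    # Greedy: the same token every time.
    print([greedy(TOKENS, LOGITS) for _ in range(5)])
    # Sampling with an unseeded generator: the draws can differ run to run.
    rng = random.Random()
    print([sample(TOKENS, LOGITS, temperature=1.0, rng=rng) for _ in range(5)])
```

Lowering `temperature` concentrates probability on the top token, so sampling behaves more and more like greedy decoding.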


- 16,305

- -2,228

I wasn't saying anything about the training process. I was completely just talking about ways to adjust the final output. What I was saying involved zero changes to the training data.

He's misunderstanding the role of randomness and conflating concepts from the training process with the final model's output process, thinking that you could create a large distribution of outputs and somehow aggregate/filter them for "correctness", thus getting a better output at additional computational cost. Basically some kind of ML perpetual accuracy machine.


How did this thread go from GPT outputs making fun of Ossoi to this?

This is why we can't have nice things.





Captain Suave

Caesar si viveret, ad remum dareris.

- 5,926

- 10,164

Yes, I get that.

I wasn't saying anything about the training process. I was completely just talking about ways to adjust the final output. What I was saying involved zero changes to the training data.

Look, I'm not personally an ML expert, but I've looked over the shoulder of one for 15 years and built a handful of smaller-scale models myself. I understand what you are describing and I promise you that it's just not how this stuff works. Wormie appears to know what he's talking about, and the reason you think he's an idiot is because he's actually doing you the favor of connecting your half-baked idea to real contact points in the development process.

Anyway, I'm out on this debate. Feel free to carry on wondering why no one is already doing this; I can only refer you to previous replies. (No, it's not compute.)


- 16,305

- -2,228

He doesn't even know what a random seed is.

- 16,305

- -2,228

Literally just lazily pasting the first three links after typing "random seed in ai" into google here:

Why use Random Seed in Machine Learning? - Analytics Yogi (vitalflux.com)

Properly Setting the Random Seed in ML Experiments. Not as Simple as You Might Imagine (opendatascience.com)

Daidraco

Avatar of War Slayer

- 11,016

- 11,845

Don't leave!!! You can make it all better by replying with ChatGPT!


Those links are talking about setting the seed for the pseudorandom number generators in order to get exactly reproducible output from models instead of random output. This is done when building anything that relies on randomness in order to verify correctness. These links have nothing to do with what you were talking about. Shut the fuck up, nimrod.

The other post I am not going to address except to say that the reason you think Vanessa was a good poster is because he too argued about subjects he knew nothing about. You are a non fag version of Vanessa and even that is questionable.


- 16,305

- -2,228

Right, they're about setting exact random seeds instead of letting the computer decide. If you don't put in your own random seed, the computer uses an algorithm to generate a pseudorandom one (often based on things like server time or other variables; think back to people who learned to game /random in EverQuest if that helps you understand).
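The seeding behavior described in these posts can be illustrated with Python's standard `random` module. This is a minimal sketch, not a description of how any particular chatbot service chooses or exposes its seeds (that part is an assumption outside the thread): a fixed seed reproduces exactly the same sequence, and leaving the seed out makes the library pick one itself from operating-system randomness or, failing that, the current time. The `draw` helper is hypothetical.

```python
import random


def draw(seed=None, n=5):
    """Return n pseudorandom integers from a generator seeded with `seed`.

    With seed=None, Python seeds the generator itself (from OS randomness
    when available, otherwise the system time), so results vary per call.
    """
    rng = random.Random(seed)
    return [rng.randint(0, 99) for _ in range(n)]


if __name__ == "__main__":
    print(draw(seed=42))  # same list every time this script runs
    print(draw(seed=42))  # identical to the line above
    print(draw(seed=7))   # a different list, but equally repeatable
    print(draw())         # unseeded: almost certainly different each call
```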

- 16,305

- -2,228

Just admit you had no idea that machine learning involved hidden variable random seeds and we can move on instead of shitting up yet another thread where people assume that just because of my reputation that I must be the one that's wrong. You are wrong. Machine learning output involves a random seed for every prompt. You're just a moron.

“Shouldn't checking multiple random seeds against each other and then producing/assuming the result with the fewest errors based on the averages there end up outputting far more accurate results?”

No shit machine learning uses a seed to generate random values. Anything that generates such values uses a seed. A seed is not set manually any time one actually trains machine learning models. Setting a random seed to a value results in exactly the same output every time anything random is done. If the random seed you were talking about is the setting for the pseudorandom number generator, then you are even a bigger retard than we all think you are. You are literally saying that we should remove randomness from models when training and compare exact outputs and accept these supposedly probabilistic results as such. If this is the case then you are a massive fucking retard. But no one is this stupid, not even you, so you are just a retard that read a blog post and thinks now he knows something and is actually fucking arguing about it when it's obvious to anyone that you are talking out of your ass. Go away.



- 16,305

- -2,228

Your earlier posts contained things like "lol what is a seed?" You clearly are backpedaling. Not going to bother reading the rest of that comment. I think I might just finally put you on ignore.

"No shit machine learning uses a seed to generate random values."

That's because "seed" made no sense in the context of what you were saying, you fucking retard. Shut the fuck up.


- 16,305

- -2,228

It would have made sense if you knew what the fuck you were talking about. Each individual response to a prompt has a random seed attached. Trying multiple seeds and filtering out the portions of each seed that don't match the average output will lead to more accurate results. Make sense now? It just would take multiplicatively more processing power, and they already can't keep up with user demand.
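A minimal sketch of the proposal as stated (one seed per response, several responses, keep whatever most of them agree on), assuming a hypothetical `toy_model` that answers a single prompt correctly with a fixed probability. It is not a real model or API, and it votes on whole answers rather than filtering "portions" of each response. The cost scales linearly: 25 seeds means 25 generations.

```python
import random
from collections import Counter

# Hypothetical stand-in for a model: for a given prompt it returns the right
# answer with probability p_correct and a random wrong one otherwise.
ANSWERS = {"2+2": ("4", ["5", "3", "22"])}


def toy_model(prompt, seed, p_correct=0.7):
    rng = random.Random(seed)  # one seed per response
    correct, wrong = ANSWERS[prompt]
    return correct if rng.random() < p_correct else rng.choice(wrong)


def majority_vote(prompt, seeds):
    """Generate one response per seed and return the most common answer."""
    outputs = [toy_model(prompt, s) for s in seeds]
    answer, count = Counter(outputs).most_common(1)[0]
    return answer, count, len(outputs)


if __name__ == "__main__":
    answer, votes, total = majority_vote("2+2", seeds=range(25))
    print(f"{answer!r} won {votes} of {total} votes")
```

Voting only helps when the wrong outputs are scattered across different wrong answers. If a model makes the same mistake under most seeds, the vote just returns that mistake more consistently.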


TomServo

<Bronze Donator>

- 8,484

- 14,871

Now I hope he starts talking out his ass about IVs and encryption.
