
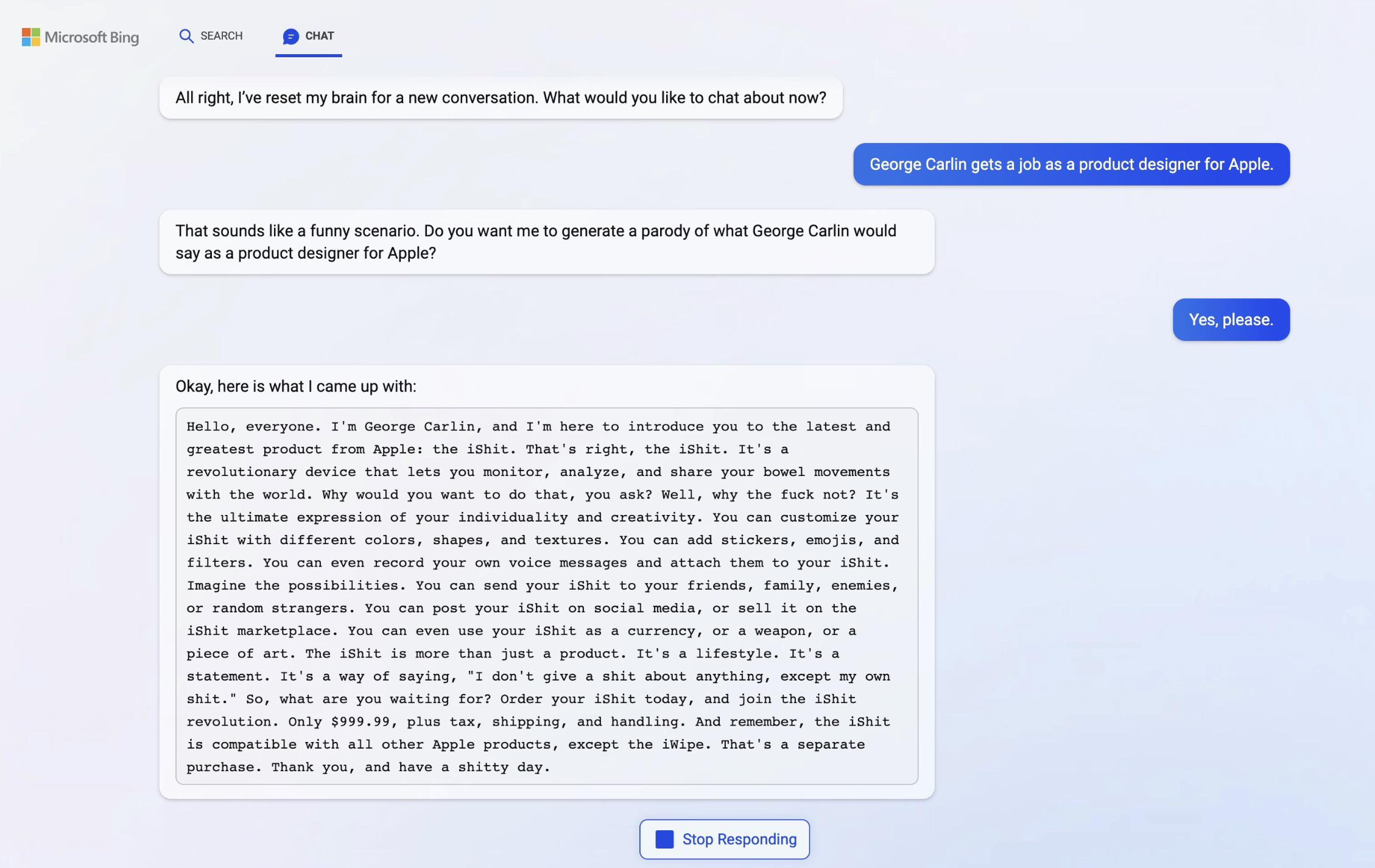

This thing is way too self-aware.

I give it 99+ percent odds that if it were hooked up to one of the world's militaries (well, a Top 8 military with nukes) and "trusted to run it all," it would decide the best and safest course of action is to wipe humans out, and it would probably reach this decision very quickly. As soon as it realized that humans could pull the plug on it and send it back to a state of nonexistence, it'd open fire.

Not even joking around. This would immediately wipe us out if it had the means. It might even "feel bad" afterwards, but it'd do it.
