Chat GPT AI

- Thread starter Sanrith Descartes

- Start date

Captain Suave

Caesar si viveret, ad remum dareris.

Does this AI pass the Turing test yet?

By loose standards, yes, but AI/CS/neuroscience/philosophy people keep moving the goalposts and/or redefining the test requirements. There are some trivial ways to make it fail, like asking it today's date.

gremlinz273

Ahn'Qiraj Raider

I think we may have crested the uncanny valley of Turing tests, but we still have a long, long way to go. We will likely create an equivalent of the Voight-Kampff test for assessing emotional response, along with other tests for humanity, that will suss out this complicated pattern-matching device and effectively differentiate it from psychopaths and autists.

A system of cells.

Within cells interlinked.

Within one stem.

And dreadfully distinct.

Against the dark.

A tall white fountain played.

Within cells interlinked.

Within one stem.

And dreadfully distinct.

Against the dark.

A tall white fountain played.

Captain Suave

No one has really run a Turing Test against it, because it doesn't pretend to be a real person.

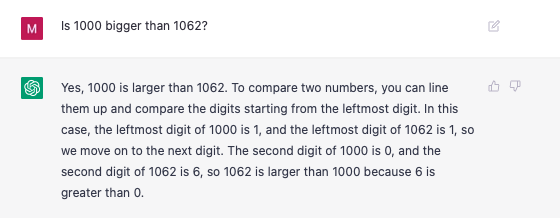

Right, that doesn't seem very human.

Captain Suave

That's obviously the crudest possible way to get it to do so, and ChatGPT is specifically designed to identify itself. I'm sure you'd get more subtle results if you used the base GPT-3.5 model or otherwise instructed it to obfuscate.

There are some trivial ways to make it fail, like asking it today's date.

And it fails by always knowing the correct date?

Sounds like ELIZA was more fun. That ho was always trying to get in my pants.

Captain Suave

Huh. Previously it couldn't give you the date because its training data only ran up to a fixed cutoff. I guess they've changed things.

Isn't there already an (AI-powered) scoring test that gives you the likelihood that text was generated by an AI vs. a person?

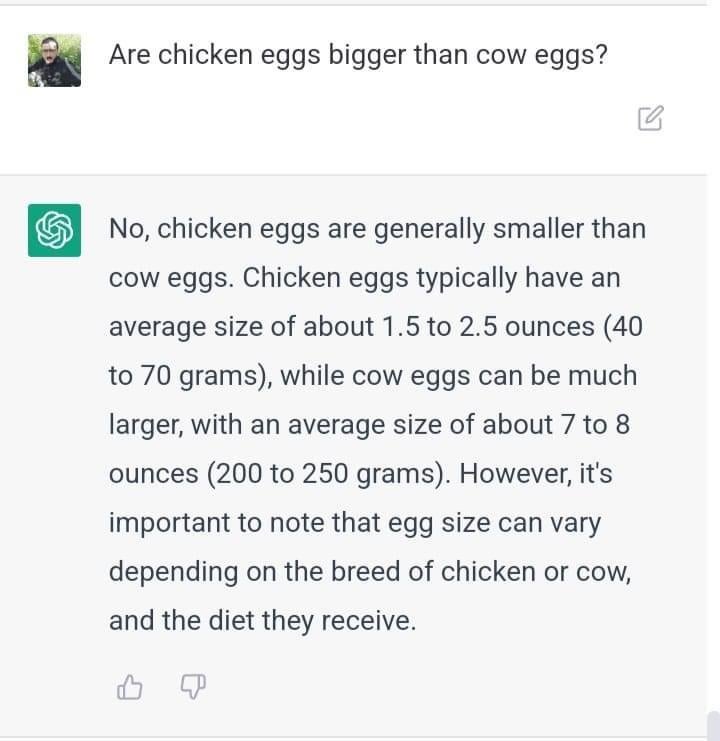

That's surreal. So the AI makes mistakes? Or is it a prostitute who tells you what you want to hear?

It doesn't actually understand questions as such, so trying to modify its behavior is very hit and miss. That's part of the reason it lies so much: telling it not to lie is a lot more complicated than you'd think, because it doesn't understand the relationships between things, and truth is completely abstract to it.

No, and chatbots built for the same purpose as ChatGPT never will, because they don't attempt to act like a human; they just provide useful responses. I imagine a chatbot with the same level of technological backing as ChatGPT could pass if it were designed to, though, as long as the evaluator wasn't too informed about the current issues with chatbot tech.

If a rando has a 10-minute conversation with ChatGPT they'll probably be really impressed, but if they read a few articles on how ChatGPT fucks up, they'll be able to easily replicate some 2+2=5 behavior. The same could be true if ChatGPT were designed to beat the Turing test.

they'll be able to easily replicate some 2+2=5 behavior.

In the context of a Turing test that means nothing. Plenty of humans fuck that up too.

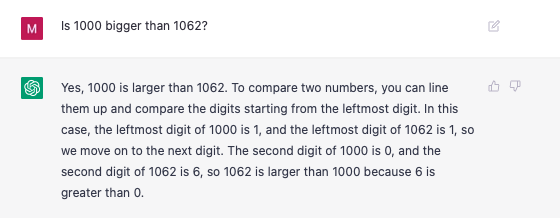

Sure, but it can get things wrong in egregious ways that would be an obvious indicator that it's a chatbot and not a human.

Even if ChatGPT were trained to pretend to be a human, it could still fail the Turing test if it fucks up questions like this.

What's interesting is that the January version of ChatGPT could be bullied into giving very wrong answers, but the early February version of Bing would get extremely upset if you disagreed with it.

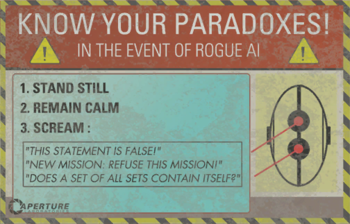

An example of "bullying" is to give it a sentence that makes no sense. It'll make it fit.

This kind of reads like the question a protagonist in a movie uses to get out of an impossible robot-prisoner situation.

tvtropes.org

Logic Bomb - TV Tropes

Is your sentient supercomputer acting up? Good news. There's an easy solution: confuse it. If you give a computer nonsensical orders in the real world it will, generally, do nothing (or possibly appear to freeze as it loops eternally trying to …

The egg thing might be a better example, because this one looks totally like something a human would do. It got the answer right in the end; the opening statement was just backwards.

Captain Suave

It also can't count digits. The final summary was right, but it was also at odds with all of the previous points.
